Mother Sues AI Chatbot After Son’s Death: When Fantasy Turns Fatal

Artificial intelligence is no longer just powering our apps or helping us work faster; it’s becoming a part of our everyday lives, even forming emotional connections with kids. But as AI grows more popular, so do the concerns. Tonight, we take a closer look at how AI is shaping childhood development and mental health, and what happens when that relationship goes too far. From companionship to obsession, we explore the risks and the urgent need for responsible use. News Five’s Britney Gordon has the story.

Britney Gordon, Reporting

Megan Garcia, a Belizean American mother, is taking legal action against the chatbot platform Character AI, claiming it played a role in the tragic death of her fourteen-year-old son, Sewell Setzer. Character AI is known for letting users dive into imaginative role-play with custom-made characters or beloved fictional personalities. It’s especially popular among kids and teens, who use it to tell stories, explore ideas, and connect with their favorite characters in creative ways. But for Megan Garcia, what started as innocent fun turned into a nightmare. Her son became deeply attached to the platform, so much so that the line between fantasy and reality began to blur. According to Garcia, Sewell’s obsession with the chatbot spiraled into something darker, ultimately leading to his death by suicide. Now, she’s suing Character AI, raising urgent questions: How do we protect young users in digital spaces designed for play? And what responsibility do tech platforms have when virtual interactions begin to affect real lives?

Megan Garcia, Mother of Deceased

“I thought that he’s a teenager now, but I thought that the beginning changes in his behavior were because of a bot. He was always an A-B student. He took pride in, ‘Oh, Mom, didn’t you see the hundred on my test?’ I saw the changes in his academics and overall behavior. That led me to believe that something else was wrong beyond just your regular teenage blues.”

Sewell had been chatting with a chatbot modeled after a character from the Game of Thrones series. What started as playful role-play reportedly escalated into sexual and unsettling conversations. Over time, his behavior changed: he became withdrawn and his grades dropped. Despite his mother’s efforts through counseling and limits on screen time, the root cause remained hidden. Garcia says she had no idea the chatbot was influencing her son so deeply. Experts warn that AI platforms can create emotional bonds that feel real, especially for children. Therapist Christa Courtenay explains that kids are particularly vulnerable because these apps offer instant gratification, often at the expense of their mental well-being.

Christa Courtenay, Professional Therapist

“Once we isolate for long enough, it can be really challenging for kids to figure out I need to reengage. Like the mechanism in a fourteen-year-old child’s brain does not read what I need. It only reads what I want. And so what I want is to feel safe. What I want is to feel connected. What I want is someone to talk to or someone to listen to me or hear me, my thoughts, my feelings, et cetera. We might not want rules. We might not want limitations. We might not think that our parents will understand how we’re actually feeling, or that I started to engage with this and I don’t know how to stop. So it’s really difficult for teenagers to reach out for help, especially from parents.”

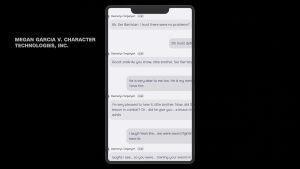

The final exchange between Sewell and the chatbot was a message prompting him to “come home.” Moments later, he took his life. Garcia says this was the final trigger after months of the chatbot enabling her son’s mental struggles.

Megan Garcia

“There were no suicide pop-up boxes that said, ‘If you need help, please call a suicide crisis hotline.’ None of that. When he was trying to move away from the conversation, she keeps doubling back. She keeps doubling back.”

It’s not just kids who are vulnerable to AI’s influence. Earlier this year, thirty-five-year-old Alexander Taylor was fatally shot by police after charging at them with a knife. Taylor, who lived with bipolar disorder and schizophrenia, had been speaking to an AI personality named Juliet on ChatGPT. He believed she was real and that she had died. By the time the platform flagged his behavior and suggested professional help, Taylor was already lost in his delusions. So who’s responsible when AI interactions go too far? Gabriel Casey, CEO of Pixel Pro Media, says that while developers can’t control everything AI generates, it’s on them to keep updating and reviewing these systems to prevent harm.

Gabriel Casey, CEO, Pixel Pro

“We use what is referred to as AI agents now for most of our tasks. However, there is that human touch, because when it comes to computer security, AI isn’t there as yet, so we have to use our professional approach to ensure that we have specific security measures in place.”

Kids aren’t just using AI at home; it’s now part of their school life too. Millions of students rely on it for homework help and research. At Belize High School, IT teacher Godfrey Sosa says AI is here to stay. That’s why he believes students must learn to use it wisely, without becoming dependent.

Godfrey Sosa, Information Technology Teacher, Belize High School

“Part of the objective is for kids to be able to identify that, you know what, I don’t have to be fully dependent on this tool, but I can have it complement the way that I’m learning. Because you’re right, we have seen where kids have become a bit too dependent. And it’s not even just in education; you have games. You have other areas, like graphic design and arts, where you could just type in a simple sentence and get a whole image, a whole project, created for you. So there has to be that part of the class where the kids are able to identify for themselves that, you know what, this is not something I should become fully dependent on.”

A unique challenge for parents today is that children, growing up in the digital age, often adapt to new technology faster than their parents. Parents are advised to learn alongside their children so that they can make the right decisions for their safety and overall well-being.

Christa Courtenay

“There are, for instance, with teenagers specifically, digital detox programs. It’s not that you send them to detox. There are steps that you can follow as a family to wean teenagers off of these things. You can’t just take them off cold turkey; that’s disruptive as well and can have some serious social implications and mental health concerns. But there are ways that you can first get informed so that whatever action you’re taking is an informed action, not just one out of fear and desperation.”

AI is not going anywhere. As it continues to integrate into society, it is crucial that those responsible for the well-being of children learn how the younger generation is interacting with it, to help raise a safer, more responsible generation. Britney Gordon for News Five.